Intro to RAG and LlamaIndex

This post is my summarized interpretation of what I read in the LlamaIndex documentation.

What is LlamaIndex?

LlamaIndex provides abstractions to make the ingestion, structuring, and access of data more straightforward, enabling seamless integration into LLMs for more accurate text generation.

While LLMs are trained on extensive datasets, they lack training on private or domain-specific data. Fine-tuning a LLM with your data is costly, making it challenging to keep the model up-to-date with the latest information. Additionally, it often lacks observability.1

What is RAG?

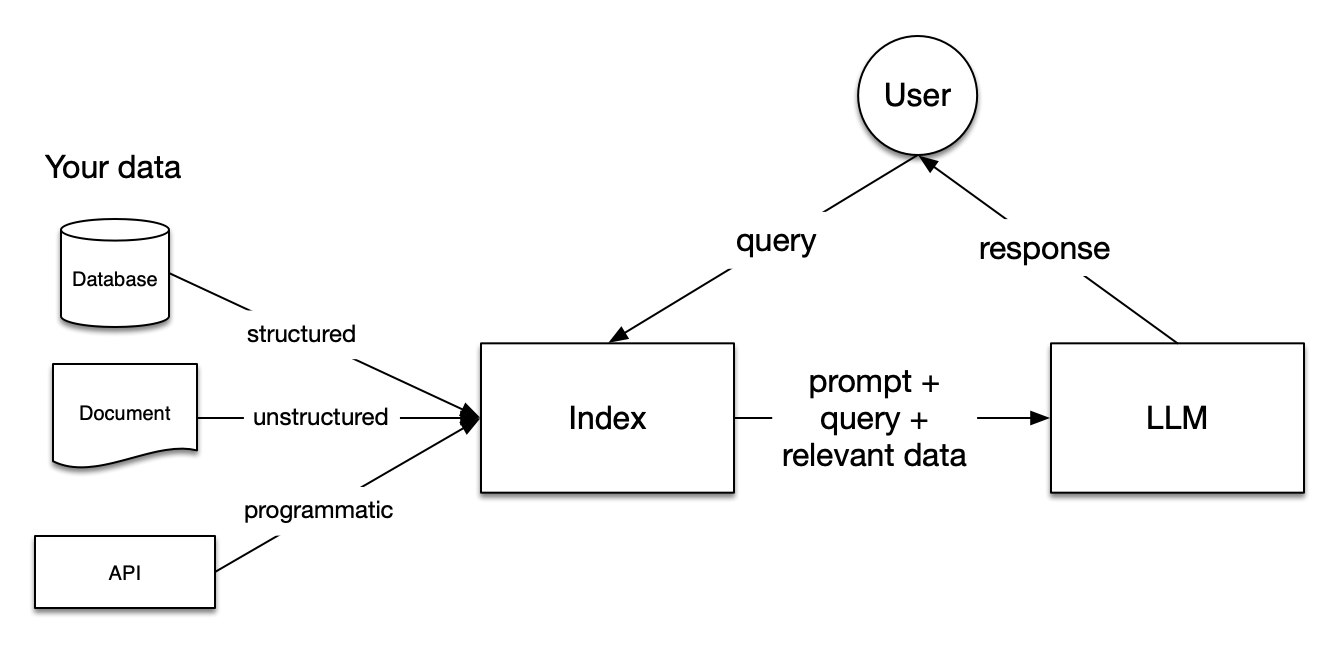

Retrieval-Augmented Generation (RAG) provides an alternative. This technique focuses on context augmentation to achieve more precise text generation tailored to your specific data. A simple way to describe RAG is that it is a method to improve domain-specific responses of LLMs.

Key steps in RAG implementation:

Get Information: Nodes serve as the atomic unit of data, and connectors assist in ingesting these nodes.

Index and Add to Query as Context: This involves generating vector embeddings and incorporating them into your query as context.

Pass Improved Prompt to LLM: Further details will be covered in upcoming posts, addressing querying, retrievers, routers, postprocessors, and synthesizers.

The beauty of RAG lies in its absence of training, ensuring it’s always up-to-date by adding your data to the existing data accessible to LLMs. Another vital aspect is the ability to store your indexes, mainly accomplished through specialized databases known as vector stores. Finally a RAG framework must effectively perform proper evaluation on the accuracy of the responses.2

Source: LLamaIndex Documentation. RAG Diagram

Source: LLamaIndex Documentation. RAG Diagram

LlamaIndex Tools

Data Connectors: Facilitate data ingestion.

Data Indexes: Provide representations of the data usable in LLMs.

Engines: Serve as end-to-end pipelines for interaction with LLMs.

Data Agents: LLM-based decision-makers offering flexibility to tackle complex problems.

Application Integrations: Enable seamless integration of LlamaIndex into other frameworks.